.png)

Software Engineering Intelligence

-

Intelligence Engine

On-demand exhaustive AI-analysis

-

Engineering Investment

Complete visibility into time & dollars spent

-

360º Insights

Create meaningful reports and dashboards

-

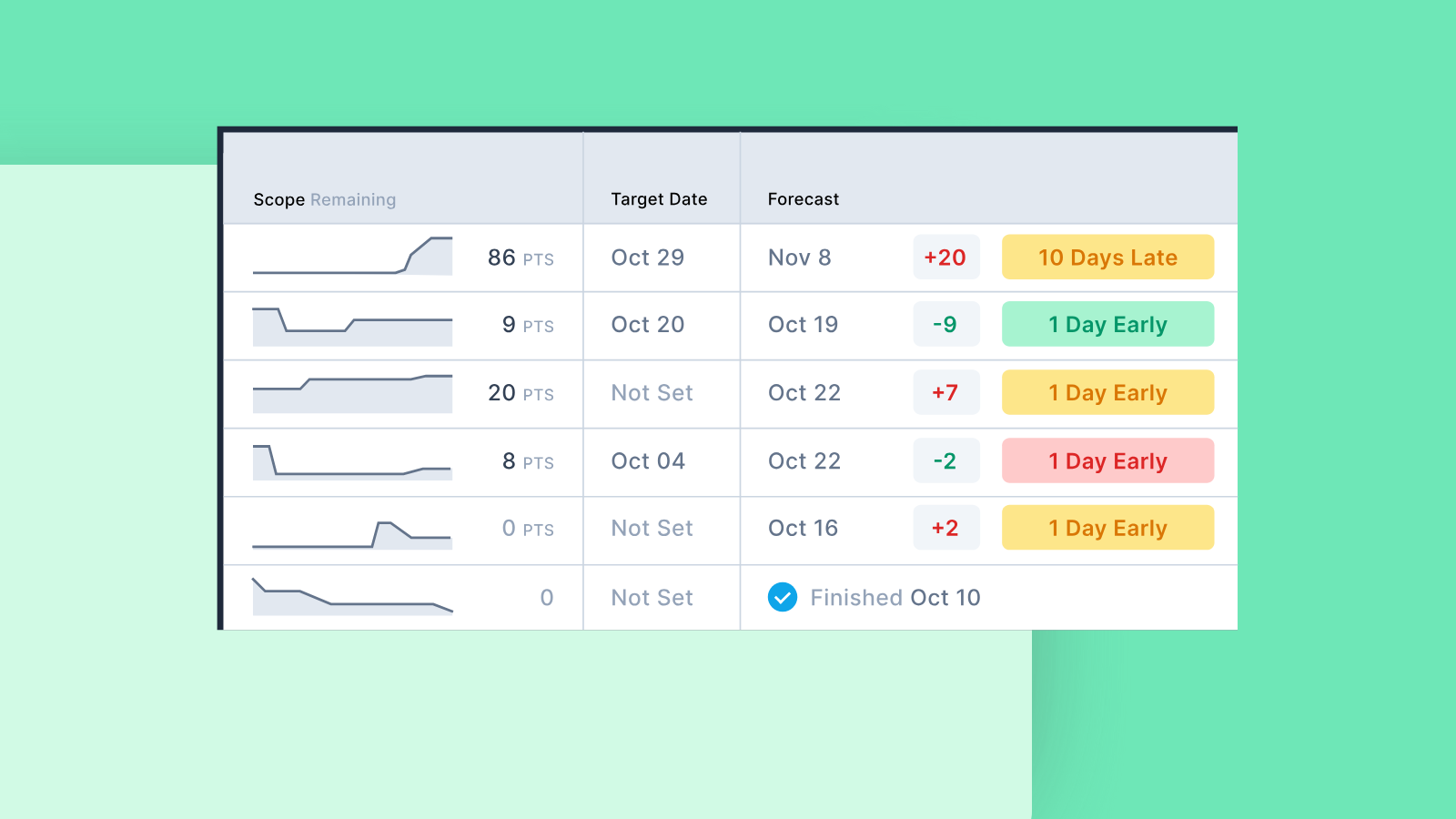

Project Forecasting

Track and forecast all deliverables

DevEx

-

Developer Surveys

Create and share developer surveys

Software Capitalization

-

R&D Capitalization Reporting

Align and track development costs

Your AI Transformation Roadmap: A CTO's Guide to Successfully Integrating AI Across Your Organization

After spending over a decade in machine learning and now leading AI integration at Allstacks, I've watched dozens of engineering teams navigate this transformation. The pattern is clear: teams that approach AI adoption strategically see transformative results, while those rushing in without a plan often create more problems than they solve.

Here's the roadmap that's working—not just for us at Allstacks, but for the most successful engineering organizations I'm seeing in the market.

The Reality Check: Why Most AI Adoption Fails

Before diving into tactics, let's address the elephant in the room. AI adoption follows the exact same patterns as every other transformative technology—DevOps, CI/CD, cloud migration. Yet somehow, leaders keep expecting different results.

The most dangerous assumption I see engineering leaders make is that they'll magically double productivity overnight. You may be able to get there with work, training, and practice, but it won't happen right out of the gate.

Here's what I'm seeing from teams that are struggling:

- They're adding AI tools to already overloaded roadmaps and expecting magic

- They're measuring only "time to write code" while ignoring the entire development lifecycle

- They have zero experimentation time because "we need to ship this quarter"

- They're cutting headcount because "AI will make us 2x faster"

Meanwhile, successful teams are taking a completely different approach.

Phase 1: Build Your Foundation (Weeks 1-4)

Step 1: Shift Your Mindset From Tool to Transformation

Stop thinking about AI as just another integration. It is becoming as core to your business as your code repository. Computer science graduates are already coming out of school having never coded without AI assistance. In 18 months, every new hire will expect AI-enhanced workflows as standard.

Action Items:

- Frame AI adoption as foundational infrastructure, not experimental features

- Set expectations with your team that this is a 6-12 month learning curve

- Allocate dedicated time for experimentation—not "when you have spare cycles"

Step 2: Identify Your Champions

Don't drive this from the top down. The most important factor in building trust in AI tools is having someone your team already trusts vouch for them.

Action Items:

- Identify 2-3 engineers who are naturally curious about new technology (mix of senior and junior)

- Give them access to AI tools first with explicit permission to experiment

- Ask them to document what works and what doesn't—both successes and failures

Step 3: Create Safe Experimentation Space

This is where most leaders fail. They want the productivity gains without investing in the learning curve.

Action Items:

- Give selected champions a full week to implement a single feature using AI tools

- Remove all roadmap pressure and delivery deadlines during experimentation

- Make it clear that "failure" to see immediate productivity gains is expected and acceptable

- Document the workflows they develop—these become your playbooks

Phase 2: Scale Through Knowledge Sharing (Weeks 5-12)

Step 4: Run Champions-Led Lunch and Learns

Let your champions do the teaching, not you. When a trusted peer shows how AI changed their workflow, it completely changes the conversation.

Action Items:

- Schedule weekly lunch and learns focused on specific tasks or workflows

- Have champions demonstrate real examples from your codebase, not hypothetical scenarios

- Allow time for questions and hands-on exploration during these sessions

- Record these sessions for team members who can't attend live

Step 5: Implement Structured Individual Experiments

Give every team member their own "AI project." I'm a fan of letting individuals pick a project and giving them a few days to a week to try it out.

Action Items:

- Let each developer choose their next feature to implement with AI assistance

- Provide 3-5 days of protected time with no other commitments

- Pair experimentation with documentation requirements—what worked, what didn't, time savings vs. challenges

- Share results across the team, including the failures

Step 6: Develop Your AI-Enhanced Workflows

Start building repeatable processes. The teams seeing real transformation aren't just using AI for autocomplete—they're building comprehensive workflows.

Action Items:

- Document the end-to-end process: spec creation in Claude/ChatGPT → implementation planning → code generation → review

- Create templates for providing context to AI tools (coding standards, architecture patterns, business logic)

- Establish code review processes specifically designed for AI-generated code

- Train your team on prompt engineering for better results
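As one illustration of a reusable context template, here is a minimal Python sketch. The `ContextTemplate` class, its fields, and the sample rules are hypothetical; the idea is simply to keep coding standards, architecture patterns, and business logic in one place and prepend them to every AI request instead of retyping that context each time.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ContextTemplate:
    """Hypothetical reusable context block prepended to every AI coding request."""
    coding_standards: list[str]
    architecture_notes: list[str]
    business_rules: list[str] = field(default_factory=list)

    def render(self, task: str) -> str:
        """Assemble a prompt: shared context sections first, then the specific task."""
        sections = [
            ("Coding standards", self.coding_standards),
            ("Architecture patterns", self.architecture_notes),
            ("Business logic", self.business_rules),
        ]
        parts = []
        for title, items in sections:
            if items:  # skip empty sections so the prompt stays short
                bullets = "\n".join(f"- {item}" for item in items)
                parts.append(f"## {title}\n{bullets}")
        parts.append(f"## Task\n{task}")
        return "\n\n".join(parts)


if __name__ == "__main__":
    # Illustrative rules only; substitute your team's real standards.
    template = ContextTemplate(
        coding_standards=["Type-annotate all public functions", "No bare except clauses"],
        architecture_notes=["Services reach the database only through the repository layer"],
    )
    print(template.render("Add an endpoint that returns a user's open invoices."))
```

Keeping the template in version control alongside the code means changes to your standards get reviewed like any other change, and every engineer's prompts pick them up.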

Phase 3: Measure and Optimize (Weeks 13-24)

Step 7: Track the Right Metrics

Stop measuring just "time to write code." You need visibility across your entire development cycle.

Action Items:

- Track code quality metrics: defect rates, maintainability scores, technical debt accumulation

- Monitor adoption rates: who's using AI tools, how often, for what types of work

- Measure developer experience: are people feeling more productive or more frustrated?

- Assess business impact: feature delivery times, bug rates, time to market
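Some of these numbers can be computed from data you likely already have. The sketch below assumes each pull request can be tagged as AI-assisted or not and linked to any defects found later; the `PullRequest` record, the helper functions, and the sample data are hypothetical, and in practice the fields would come from your version control system and issue tracker.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PullRequest:
    """Hypothetical PR record assembled from VCS and issue-tracker data."""
    author: str
    ai_assisted: bool    # assumption: developers or tooling tag AI-assisted PRs
    caused_defect: bool  # assumption: a later bug ticket was linked back to this PR


def adoption_rate(prs: list[PullRequest]) -> float:
    """Share of active authors with at least one AI-assisted PR."""
    authors = {pr.author for pr in prs}
    adopters = {pr.author for pr in prs if pr.ai_assisted}
    return len(adopters) / len(authors) if authors else 0.0


def defect_rate(prs: list[PullRequest], ai_assisted: bool) -> float:
    """Fraction of PRs in the chosen group that were later linked to a defect."""
    group = [pr for pr in prs if pr.ai_assisted == ai_assisted]
    if not group:
        return 0.0
    return sum(pr.caused_defect for pr in group) / len(group)


if __name__ == "__main__":
    # Made-up sample data, for illustration only.
    prs = [
        PullRequest("ana", True, False),
        PullRequest("ana", True, True),
        PullRequest("ben", False, False),
        PullRequest("cho", True, False),
        PullRequest("cho", False, True),
    ]
    print(f"Adoption: {adoption_rate(prs):.0%}")
    print(f"Defect rate (AI-assisted): {defect_rate(prs, True):.0%}")
    print(f"Defect rate (other): {defect_rate(prs, False):.0%}")
```

Looking at adoption and defect rates side by side is the point: a rising adoption number on its own says nothing about whether quality is holding up.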

Step 8: Address Technical Debt Proactively

This is where AI can bite you. AI tools will implement whatever you ask, usually with limited context about your broader system architecture.

Action Items:

- Include architectural context in every AI prompt

- Create explicit coding standards documentation for AI tool consumption

- Establish systematic code review processes for AI-generated code

- Monitor for patterns like duplicate imports, security vulnerabilities, or architectural inconsistencies
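Some of these checks can be automated. As a minimal sketch, assuming a Python codebase, the function below uses the standard-library `ast` module to flag imports repeated at the top level of a file. `find_duplicate_imports` is a hypothetical helper, not part of any existing tool, and it only inspects module-level statements, so imports nested inside functions, classes, or conditional blocks are ignored.

```python
from __future__ import annotations

import ast
from collections import Counter


def find_duplicate_imports(source: str) -> list[str]:
    """Return import statements repeated at the top level of a Python module.

    Hypothetical helper: only module-level statements are inspected.
    """
    tree = ast.parse(source)
    seen = Counter()
    for node in tree.body:
        if isinstance(node, ast.Import):
            for alias in node.names:
                seen[f"import {alias.name}"] += 1
        elif isinstance(node, ast.ImportFrom):
            # Keep the leading dots of relative imports ("from . import x").
            module = "." * (node.level or 0) + (node.module or "")
            for alias in node.names:
                seen[f"from {module} import {alias.name}"] += 1
    # Anything counted more than once was imported redundantly.
    return sorted(name for name, count in seen.items() if count > 1)


if __name__ == "__main__":
    sample = (
        "import os\n"
        "import json\n"
        "from typing import Any\n"
        "import os\n"
        "from typing import Any, Optional\n"
    )
    print(find_duplicate_imports(sample))
    # ['from typing import Any', 'import os']
```

A check like this could run in CI on every pull request, so the reviewer's attention goes to architectural fit rather than mechanical cleanup.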

Step 9: Scale Across the Organization

Now you're ready to move beyond engineering. Done right, the force-multiplier effect across your organization is going to be huge.

Action Items:

- Train product managers to use AI for requirement writing and specification creation

- Show sales teams how to use AI for call scripts and customer briefs

- Help marketing teams leverage AI for content creation and campaign development

- Make AI-native tool selection the default for any new software purchases

Phase 4: Build AI-Native Products (Months 6-12)

Step 10: Rethink Your Product Strategy

If you're building a company today, it had better be an AI company. This doesn't mean bolting AI features onto existing products; it means rethinking what becomes possible when AI is foundational.

Action Items:

- Audit your current product: what workflows could be fundamentally improved with AI integration?

- Ask the better question: "What becomes possible when AI is foundational to how our product works?" instead of "How do we add AI to our product?"

- Identify your unique data and domain expertise that can be fed into AI systems

- Avoid building features that improvements in base AI models are likely to make obsolete within 6 months

Step 11: Avoid the AI Gold Rush Trap

Don't build AI for the sake of AI. I see too many companies pushing to add AI features just to call themselves AI companies.

Action Items:

- Ask: "Does this AI feature solve a problem our customers actually have?"

- Ensure you're using unique data or domain expertise, not just base LLM capabilities

- Test whether your AI feature would still be valuable if competitors had the same base AI access

- Measure business outcomes, not just implementation speed

The Long-Term Competitive Reality

Here's what every founder and CTO needs to understand: the teams that are behind on AI adoption today will be two years behind their competitors by 2026.

This isn't just about productivity gains—it's about fundamental competitive advantage. Companies that don't think about AI integration from the beginning may need to raise 2-5x more money to accomplish the same business goals.

Your Next Steps

If you're reading this and haven't started your AI transformation yet, here's what to do this week:

- Identify your first 2-3 AI champions

- Block out experimentation time on their calendars—no other commitments

- Pick one specific feature or workflow for them to redesign with AI assistance

- Give them permission to "fail" while learning

The learning curve is real, but the competitive advantage is even more real. The teams that invest in proper AI adoption now will be the ones defining what's possible in software development over the next decade.

Remember: this isn't about replacing developers. It's about fundamentally changing how we think about problem-solving. The developers who can't imagine going back to writing code without AI assistance aren't using it because it's faster. They're using it because it has changed how they approach building software entirely.

That transformation is what you're really building toward.

Jeremy Freeman is CTO and Co-Founder of Allstacks, an AI-native Value Stream Intelligence platform. With over a decade of experience in machine learning and computer vision, he's leading AI integration across both Allstacks' engineering team and product development. Connect with Jeremy on LinkedIn for more insights on AI adoption in engineering organizations.